Composr Tutorial: Improving your search engine ranking

Written by Chris Graham (ocProducts)

For most websites, it is important to draw in visitors. There are many ways to do this, and one of them is improving your site's 'findability' in search engines; this includes presence in the results for keywords appropriate to the visitors you wish to attract, and your actual position in the displayed search results.

It is worth noting at this point that, in my view, search engine ranking is only one small part of the 'visitor equation'. There are many ways to attract visitors, including:

- having a good domain name for the subject matter (e.g. buyspoons.com for a company selling spoons)

- having a domain name that people can easily say and remember (e.g. amazon.com, ebay.com, yahoo.com, google.com, microsoft.com; note they are all just two or three syllables, and 'flow' and/or relate and/or bring up imagery)

- having a URL that is given out directly or close-to directly, such as on a business card or a sign

- Internet banner (or similar) advertising

- 'placement' of your URL in a prime location, such as in a directory, or made available by someone who has it in their interest to 'pipe' users to you

- online or offline word-of-mouth, either by you, or by your visitors

- quality of content, such that your site becomes the place to go

- associating your site with an official cause. A site which is somehow the natural place for people following this cause will naturally get more hits.

How web crawlers work

Search engines work via one of four techniques (or a combination):

- manually maintained directories (this is now mostly a thing of the past, e.g. DMOZ, the Open Directory Project, was shut down)

- accessing databases (e.g. Google uses Wikipedia)

- web crawling (e.g. Google has one of the best web crawlers)

- aggregation/taking of data from other search engines (e.g. Yahoo used to use Google)

A web crawler is a tool that will automatically find websites on the Internet, via hyperlinks to them. The basis of the process is very simple:

- the web crawler starts with a set of pages it already knows

- the web crawler goes to these pages, and:

- indexes the page

- finds what hyperlinks are on the page, and remembers them

- stores the pages it has found in its list of pages it knows, and hence these pages will themselves be crawled, and so on
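The crawl loop described above can be sketched in a few lines of Python. This is only a minimal illustration, not how any real search engine is implemented: the `fetch` callable and the toy `SITE` dictionary are assumptions made so the example runs without network access.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects the href of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seed_urls, fetch, max_pages=100):
    """Breadth-first crawl; fetch(url) returns HTML or None (hypothetical helper)."""
    known = set(seed_urls)    # pages the crawler already knows
    queue = deque(seed_urls)  # pages still waiting to be visited
    index = {}                # url -> HTML, a stand-in for a real search index
    while queue and len(index) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue
        index[url] = html                  # "index" the page
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:          # remember the hyperlinks found
            target = urljoin(url, href)
            if target not in known:
                known.add(target)
                queue.append(target)       # it will be crawled later
    return index


# Toy in-memory "web" (made-up data, for illustration only)
SITE = {
    "http://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "http://example.com/a": '<a href="/b">B</a>',
    "http://example.com/b": '<a href="/">Home</a>',
    "http://example.com/orphan": "Nothing links here",
}

pages = crawl(["http://example.com/"], SITE.get)
print(sorted(pages))
```

Note that the `orphan` page is never reached, because no crawled page links to it.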

It is because of this web crawling process that it is important to be linked to. If there are no links to your website on pages that themselves are crawled, it might never be found unless you tell the search engine about it by hand. Google uses a 'PageRank' system which actually factors in the number of links to a page as a part of the result ranking of that page (on the basis that a page with more links to it is more popular and therefore more relevant).
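To make the idea of link-based ranking more concrete, here is a simplified textbook version of the PageRank calculation. It is a sketch only: the damping factor of 0.85, the fixed iteration count and the tiny link graph are assumptions chosen for illustration, and Google's real ranking uses many more signals than this.

```python
def pagerank(links, damping=0.85, iterations=50):
    """links maps each page to the list of pages it links to."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Every page starts each round with a small baseline share
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page passes its rank on equally to the pages it links to
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its rank evenly
                share = damping * rank[page] / n
                for p in pages:
                    new_rank[p] += share
        rank = new_rank
    return rank


# Made-up link graph: three pages link to "shop", nothing links to "blog"
links = {
    "home": ["news", "shop"],
    "news": ["shop"],
    "blog": ["shop"],
    "shop": ["home"],
}
ranks = pagerank(links)
for page in sorted(ranks, key=ranks.get, reverse=True):
    print(page, round(ranks[page], 3))
```

In this toy graph the page with the most inbound links ends up ranked highest, and the page nobody links to ends up lowest.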

Techniques

Composr SEO options

- Use good, accessible and 'semantic' HTML, and lay out your site in an accessible fashion. By default, Composr has support for the highest level of accessibility, and has perfectly conformant HTML (except in certain areas where conformance is not possible, due to web browser limitations). By providing a well-constructed site, you allow search engines to index your site more appropriately and thoroughly, and they may regard it more highly. It is a convenient parallel that accessibility features that help disabled users also help search engines (especially site-maps and alt-text)

- Set well considered meta keywords and descriptions for your site as a whole, and for individual items of content that you consider particularly important. Try to make it so that your content and keywords correlate: if your keywords also appear in your text, search engines are likely to strengthen their ranking of your site against them. Composr will try and automatically pick a list of keywords for any added entry of content if you do not specify them, but you should hand edit this list if you have time

- Use good page titles for your Comcode pages. Composr will use page titles as a part of the actual title-bar title of your site, thus allowing you to get keywords into a prime position of consideration for search engine crawlers

- Likewise, use good hyperlink titles

SEO options for Composr content

- Get keywords into your own URLs (the choice of domain name, the URL path, and the URL filename). Composr's "URL Monikers" feature will help here

- Get your site linked to by as many quality sites as possible: the more popular the sites, the better (don't get linked to on "link farms" though). This is not just good for the obvious reason of getting hits from those sites, but will also make search engines think your site is popular (in particular, the Google PageRank algorithm basically bases a website's popularity on the number of links it gets)

- Add your site to directories or ranker sites, including large directories of any kind of website, and small specialist directories relevant to your website

- Make sure your XML Sitemap is being generated (you will need the Cron bridge scheduler working for this), and submit the sitemap to Google. This is described in more detail in the next section

- Submit your site to additional search engines (Google, Yahoo, Microsoft and Ask are automatic via the XML Sitemap); note that when you submit a site to a search engine, you are only giving it optional information, as they are designed to find websites regardless of whether they are submitted or not. You might want to pay for a service that submits to multiple search engines for you, but be wary of this: search engines may mark these submissions as spam if your submission does not match that search engine's submission policy (many of which in fact exclude submission from such bulk services). Note that some search engines require payment for listing

- Don't waste your time submitting to obscure search engines

- Do not 'cheat' search engines by making pages with a lot of hidden words: search engines will penalise you for it

- Make a lot of good written content, so search engines have more to index against. Of course, this will also add to the quality of your site

- Use the URL Schemes feature, as search engines will then see pages opened up with different parameters (to access different content) as separate. This means they are more likely to consider them separately

- Use dedicated "landing pages" that you can link to from other websites. A landing page is just a normal page that you intend to point people to. For example, you might make an article landing page that is linked to from a directory of articles. The purpose is to give search engines something extra and real to index without distracting your primary visitor flow

- Set aside time for regular testing; test that the right pages show up when doing searches, and the wrong ones don't

- Make sure your site has a presence on social media (e.g. Facebook and Twitter)

- If targeting an international audience, consider having high-quality translations done of your content so that non-English search engines have some food to pick up on (Composr has excellent multi-language support)

There are many rogues and companies out there that will promise unachievable things when it comes to getting high search engine rankings: a site that a user would not want to visit and respect, is not going to be able to abuse search engine ranking schemes easily. Our best advice for this is that you should focus on quality, not cheating the system: quality is easier, and cheating will usually end up working against you.

Content techniques

If you do want to put a lot of tuning effort into your content, here are some additional techniques.

The key is to understand the goals of the page. Yes, you want to get Internet users to come and look at your page, otherwise it's just an exercise in personal writing. But what do you want them to do when they get there? Is it just an information page, or are you trying to get the visitor to buy or do something?

Here are a few things to bear in mind:

- Research keywords and select the most appropriate for the topic. These should be used within the page title, your main title and the first 25 words of text. Composr automatically uses the title you enter for a news article as both the article title (Heading 1) and the metadata title.

- Establish useful synonyms. Rather than repeating the same word or phrase over and over again, build a list of useful synonyms to draw on.

- Use Heading 1 (H1) and Heading 2 (H2) tags; these should both relate to the topic of the document and should work together. Composr will automatically add the H1 tag for you when you fill in the page title. There should only ever be one H1 tag on a page.

- Use contextual links. When you link to one of your pages, make the link text the same as the page title or related to the topic of the page. For example, if your page is about orange juice, make the link text "orange juice". This helps build authority within your site: Google understands that you are saying that page is about orange juice.

- Link to the new page from a first level page. A first level page is the home page itself or anything that is linked directly from the home page. A second level page is anything that is linked from a first level page. Usually a good drop-down menu will achieve this for you.

- Use the key word/phrase within the alt attributes of Images. For example "a picture of a glass of orange juice" might become "A picture of a glass of orange juice on a table at ocProducts".

- Try and write at least 250 words; as far as Google is concerned, the more keyword-rich text the better for relevance.

- Don't just add lots of meta keywords because you think they might be good to have; if they are not relevant to the page you are working on, leave them out. 15 to 20 keywords is more than adequate.

- The meta description should be no more than 150 characters, as Google will cut it off after this point. It should include your main keyword/phrase. For example, if the page was about ocProducts and our love for orange juice, you might use: "This is a page written about the ocProducts staff's love for orange juice and their ongoing search for the best tasting orange juice in Sheffield"

- Ideally the keyword density should be around 5-7% of the page (and never above 10%). This means around 5-7% of the words should be related to the keywords and phrases.

- Copy should seem natural and not forced. If your website copy is unreadable to site visitors no-one will stick around or link to you.

- Search engines read top to bottom on the page, so make sure you use the keywords and phrases near the top of the page.

- Be first: if you can be the first to write about something then that counts in your favour. This is difficult to do in a lot of cases; don't decide against writing something because it's already been done.

- Searches look for key phrases more often than singular words, so try and include full phrases too. For example the page may be about "Orange Juice" but a user may search for:

- Smooth Orange juice

- Natural Orange juice

- Orange juice with bits

- Orange juice cartons

- Fresh Orange juice

- And many other possibilities

- Use cross links on your site. If you have a page about apples on your site, then wherever you mention apples, make the mention link to the apples page. You don't need to do it more than once on a page, so if you refer to apples three times only one needs to be made a link.
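Two of the numeric guidelines above (keyword density and the meta description length) are easy to check automatically. The following is a rough sketch, not a Composr feature: the function names are made up for illustration, and "density" is assumed here to mean the percentage of words on the page that fall inside occurrences of the key phrase, which is only one of several definitions in use.

```python
import re


def keyword_density(text, phrase):
    """Percentage of the words in `text` that belong to occurrences of `phrase`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    target = re.findall(r"[a-z0-9']+", phrase.lower())
    if not words or not target:
        return 0.0
    span = len(target)
    hits = 0
    i = 0
    while i <= len(words) - span:
        if words[i:i + span] == target:
            hits += 1
            i += span  # skip past this occurrence so matches never overlap
        else:
            i += 1
    return 100.0 * hits * span / len(words)


def description_ok(description, limit=150):
    """True if the meta description fits within the suggested character limit."""
    return len(description) <= limit


copy = "Fresh orange juice is great. We love orange juice."
print(round(keyword_density(copy, "orange juice"), 1))
print(description_ok("A page about our search for the best orange juice in Sheffield"))
```

A short sample like the one above will naturally show a very high density; the 5-7% guideline only makes sense when measured against a full page of copy.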

XML Sitemap

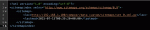

Composr uses a sophisticated indexing system for the sitemap to grow efficiently over time. This screenshot shows the index file, which is what is fed to the search engines.

The XML Sitemap format is recognised by the major search engines, including:

- Google

- Yahoo

- Microsoft

- Ask

Composr will generate a very thorough Sitemap for your website, providing links to all content, even forum topics (Conversr only).

Composr will also automatically submit the Sitemap to the above search engines every 24 hours, so long as the CRON bridge scheduler is enabled and the 'Auto-submit sitemap' option is on. Configuration of the CRON bridge is described in the Basic configuration and getting started tutorial. You will know if it is not working because your Admin Zone front page will say so.

If you cannot configure the CRON bridge scheduler for some reason, you can force generation of the sitemap from Commandr:

Code

:require_code('sitemap_xml');

build_sitemap_cache_table();

sitemap_xml_build();

To test your Sitemap is generating correctly, open up http://yourbaseurl/data_custom/sitemaps/index.xml. You should see an XML document with reference to individual sitemap(s).
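If you would rather check the index file with a script than by eye, something like the following sketch can list the individual sitemaps it references. It relies only on the standard Sitemaps protocol namespace; the sample XML and the `set_0.xml` / `set_1.xml` file names are invented for illustration and may not match what Composr actually generates.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the Sitemaps protocol (sitemaps.org)
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def list_child_sitemaps(index_xml):
    """Return the URL of every sitemap referenced by a sitemap index document."""
    root = ET.fromstring(index_xml)
    return [
        loc.text.strip()
        for loc in root.findall("sm:sitemap/sm:loc", SITEMAP_NS)
        if loc.text
    ]


# Made-up sample; in practice you would download
# http://yourbaseurl/data_custom/sitemaps/index.xml and pass its contents in.
SAMPLE_INDEX = """
<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <sitemap>
    <loc>http://yourbaseurl/data_custom/sitemaps/set_0.xml</loc>
  </sitemap>
  <sitemap>
    <loc>http://yourbaseurl/data_custom/sitemaps/set_1.xml</loc>
  </sitemap>
</sitemapindex>
""".strip()

for url in list_child_sitemaps(SAMPLE_INDEX):
    print(url)
```

An empty list would suggest the index has been created but no individual sitemaps have been generated yet.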

Important note about Google

For Google to accept the automated Sitemap submissions you need to first submit it via Google Search Console. After you have done this, future automated submissions will go straight through. Google Search Console is a great tool in its own right to see how your website is performing on Google.

Alternatively you can reference it in your robots.txt file, as shown in the Security tutorial.
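As a quick check that a robots.txt file really does advertise the sitemap, Python's standard `urllib.robotparser` module can read back any `Sitemap:` lines (the `site_maps()` method needs Python 3.8 or later). The robots.txt content below is an assumed minimal example, not a copy of the one given in the Security tutorial.

```python
from urllib.robotparser import RobotFileParser

# Assumed minimal robots.txt; substitute the contents of your own file
ROBOTS_TXT = """\
User-agent: *
Disallow:

Sitemap: http://yourbaseurl/data_custom/sitemaps/index.xml
"""


def declared_sitemaps(robots_txt):
    """Return the sitemap URLs declared in the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.site_maps() or []  # site_maps() returns None when there are none


print(declared_sitemaps(ROBOTS_TXT))
```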

URL Monikers

The Composr URL moniker feature will put keywords into the URLs instead of ID numbers, which is great for SEO but also makes the URLs more comprehensible to humans.

The keywords are found by processing some source text, typically the content title.

The URL monikers option can be enabled/disabled via the "URL monikers enabled" option.

Note that URL monikers will be given a leading hyphen if the source text is fully numeric. This is because URL monikers cannot be numbers as they would look like raw content IDs.
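To illustrate the general idea, here is a hypothetical sketch of how a moniker could be derived from a content title, including the leading hyphen for fully numeric text. It is not Composr's actual algorithm (which is written in PHP and has its own rules); the function name and the 60 character limit are assumptions.

```python
import re


def make_moniker(source_text, max_length=60):
    """Hypothetical moniker generator; Composr's real rules may differ."""
    # Lower-case the text and turn every run of other characters into one hyphen
    slug = re.sub(r"[^a-z0-9]+", "-", source_text.lower()).strip("-")
    slug = slug[:max_length].rstrip("-")
    if slug.isdigit():
        # A fully numeric moniker would look like a raw content ID
        slug = "-" + slug
    return slug


print(make_moniker("Fresh Orange Juice!"))
print(make_moniker("2024"))
```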

One small disadvantage of URL monikers is that they make it a bit harder to find out ID numbers, as you can't just look in URLs. However, you can still find them on edit links, or if you look in the HTML source you will often see:

Code (HTML)

<meta name="DC.Identifier" content="http://yourbaseurl/data/page_link_redirect.php?id=_SEARCH%3Asomemodule%3Aview%3A13" />
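If you need to do this for many pages, the ID can be pulled out of that URL programmatically. The sketch below assumes, based on the example above, that the `id` parameter holds a colon-separated page-link whose last component is the content ID; the function names are made up for illustration.

```python
from urllib.parse import parse_qs, urlparse


def page_link_from_identifier(identifier_url):
    """Extract the decoded page-link from a DC.Identifier URL."""
    query = parse_qs(urlparse(identifier_url).query)
    return query["id"][0]  # parse_qs has already decoded %3A into ':'


def content_id(page_link):
    """Assume the last colon-separated component is the content ID."""
    return page_link.split(":")[-1]


url = "http://yourbaseurl/data/page_link_redirect.php?id=_SEARCH%3Asomemodule%3Aview%3A13"
link = page_link_from_identifier(url)
print(link)
print(content_id(link))
```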

Underscores and Dashes

Any underscores (_) in page names will automatically be replaced by dashes (-) in URLs, as this is the convention for URLs.

This happens regardless of configuration.

Google Search Console

Google Search Console lets you monitor search engine crawling activity on your website. Other search engines often have similar systems.

You first need to prove ownership of a particular site via an automated process, then the range of tools becomes available to you.

Stay calm

People often have questions regarding Google Search Console because it displays various errors and warnings, and people fear these impact SEO.

Most errors displayed have nothing to do with hurting your general search ranking; they are only warnings about specific URLs, rather than the site as a whole. Any non-trivial site will have many of these errors, if only because sites change over time and Google has old URLs in its database. Google obviously is not going to penalise all these websites, and won't penalise you for some untidiness either.

It's important to understand that Google doesn't do a lot of negotiation about what exactly it should do with your site. There are a few standards that kind of give it hints, like robots.txt, canonical URLs, nofollow headers, robot meta tags, the XML sitemap, and it doesn't put URLs containing HTTP errors into its search index, but generally what it does is it trawls through your site repeatedly, noting down all the URLs it finds. It then remembers the URLs and will continue to try them in the future, regardless of whether they are valid anymore.

Often access-denied errors show up. You should not think of Google being given an error as something necessarily wrong with your site. It simply means Google made a request, and received an error response. That is a normal and legitimate thing to happen, especially if it is a 401 "access denied" response. It's a normal part of the web, not a system failure. This is a subtle but important point: Google getting a 401 response is not like a police caution, it is normal and instructional. It is an error with respect to that URL not being accessible, but not an error with respect to a system failure or even implication of a bad link (it is valid to give users a link that returns a 401 response in some cases, for example, telling them to log in).

A perfectionist attitude isn't wholly bad for SEO, especially if you are tuning pages or crafting how things are linked together, but it doesn't work well if you take Google Search Console warnings out of context, as something that needs to be eliminated 100%. Google Search Console provides these warnings to help you review things in general.

Of course, if you are seeing an error on a particular URL and you want that URL to be crawled, you should look into it.
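One practical way to stay calm is to filter the error list down to the URLs you actually care about. The sketch below assumes you have exported the crawl errors as simple (URL, HTTP status) pairs and keep your own list of pages that should be crawlable; both data sets and all the URLs here are invented for illustration.

```python
def errors_worth_fixing(crawl_report, wanted_urls):
    """Keep only error responses on URLs you actually want crawled."""
    wanted = set(wanted_urls)
    return [
        (url, status)
        for url, status in crawl_report
        if status >= 400 and url in wanted
    ]


# Hypothetical export: (URL, HTTP status the crawler received)
crawl_report = [
    ("http://yourbaseurl/news/view/orange-juice.htm", 200),
    ("http://yourbaseurl/adminzone/", 401),  # access denied: expected
    ("http://yourbaseurl/old-page.htm", 404),  # removed long ago: expected
    ("http://yourbaseurl/catalogues/juices.htm", 500),
]
wanted_urls = [
    "http://yourbaseurl/news/view/orange-juice.htm",
    "http://yourbaseurl/catalogues/juices.htm",
]

print(errors_worth_fixing(crawl_report, wanted_urls))
```

Here only the catalogue page is reported, since it is the one URL that both returned an error and is meant to be crawled.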

A note of caution regarding agencies / Can you really get a Google top result?

A lot of web design companies make a very bold claim: that they can get you a Google top result.

It's a strong seller. Everybody wants to dominate their competitors in Google and get 'massive traffic' for 'huge profits', and sit back while their website 'runs on automatic'.

It's also a claim that is true. These companies can get you a Google top result.

There's just one catch: it won't be for the term you want.

Anybody can get a Google top result for something particularly obscure.

For example, we are top on Google for the following terms:

- web design sheffield "world class" functionality

- composr web development

- tired of partial solutions

- cms web development "projects partnerships"

- best CMS

- web design sheffield

- reliable web developer

So, if you are shopping for web design and someone is telling you that you'll get a top Google result, don't expect it for "London carpenter" or "best chauffeur", expect it will be something far more obscure. It may well still be a term that does get a modest stream of searches though (Google's keyword suggestion tool is great for finding 'long tail' searches that people actually do but aren't particularly competitive).

Some companies do actually say they can get you a top Google result for any search term of your choice. Thinking logically, the only ways they could do this are:

- Doing it only if you have an infinite budget (with infinite resources, you could incentivise infinite quality back-links and invest in whatever is required to make the website the most compelling one ever built)

- Bribing or Hacking Google (seems very unlikely)

- Calling a paid Google ad a top Google result (likely very common)

So to summarise, do not take the stupid claims many web companies make at face value. This is particularly a problem with lower quoting companies, who have a business model that requires treating their customers like sheep and subjecting them to a flashy sales process that makes a lot of shallow but impressive claims. These companies are legitimate in the sense that the model allows them to do stuff cheaper than anyone else and to a basic reasonable quality, within the budget of very small businesses, but it's important to also have an understanding of what you get for the money and why they are so cheap.

A little closing advice

SEO is certainly a strong possible marketing route for many websites. Two scenarios are particularly strong:

- for small businesses that serve a local community. These businesses can actually work off their local nature to great advantage (imagine a query involving the name of their trade, with the town they operate in appended on the end).

- for websites with a lot of community-generated or database-generated content. These sites will naturally end up targeting a large volume (a 'long tail') of less common terms that people do search for but aren't so competitive. A bit of work exposing the appropriate terms automatically and you can have a very effective strategy.

Concepts

- SEO

- Search engine optimisation: the process of improving a website's ranking in search engine results

- Crawler

- The 'work horse' of search engines that moves between links on the world-wide-web, finding and analysing web-pages

- XML Sitemap

- A standard format for listing the pages of your website, recognised by all the major search engines

See also

- URL Schemes in Composr

- Basic configuration and getting started

- Helping improve site accessibility for disabled users

- Metadata

- Submit to Google

- Submit to Bing

- Sitemaps specification

- Google Search Console

- https://www.werockyour…moz-vs-raven-vs-majestic/

- moz.com

- SEMrush

- Ahrefs
